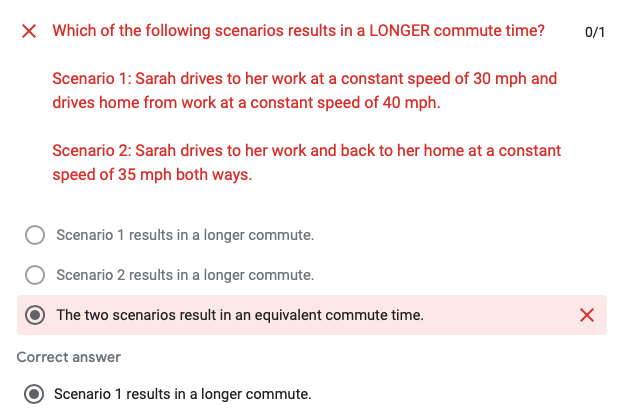

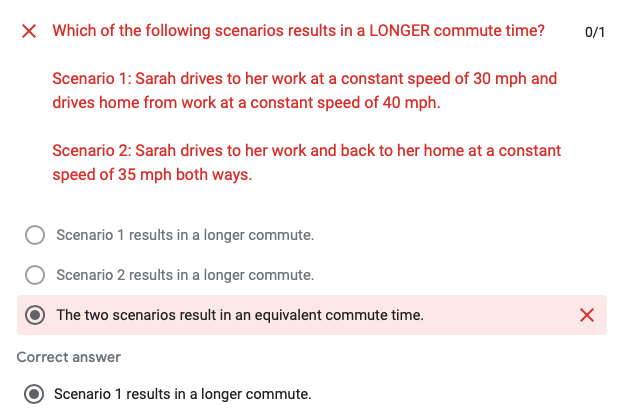

How come scenario 1 results in a longer commute? Where did I get it wrong based on my working below?

Assume distance = 1 mile for both scenarios

Scenario 1

1 mile = (30 + 40)mph x hour

0.014 hour x 60 min/1hour = 0.84 min

Scenario 2

1 mile = (35 + 35)mph x hour

0.014 hour x 60 min/1hour = 0.84 min

Shouldn’t the answer be they are equal?

The distances when she drives to/from work aren’t the same in the first scenario.

Thanks. Apologies, can you elaborate? I must have missed that additional info about the distance in the question. I have re-read it but can’t find any. My original strategy for answering this question was just to plug in a sample number for the distance, because I do not know the distance.

Suppose the distance from home to work is 120 miles. Find the time taken in the first scenario.

I still get the same answer, i.e. equal

For example,

Scenario 1: 120 / (30 + 40) = 1.71 hour

Scenario 2: 120 / (35 + 35) = 1.71 hour

You’re missing the point. Let’s look at scenario 1:

- She drives 120 miles at 30 mph from home to work → she takes 4 hours for that part

- She drives 120 miles at 40 mph from work to home → she takes 3 hours for that part

In total, she takes 4 + 3 = 7 hours.
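To compare the two scenarios side by side, here is a minimal Python sketch of the same arithmetic. It assumes scenario 2 means 35 mph in both directions (taken from the working earlier in the thread, since the question itself is only in the images), and the function name `round_trip_hours` is made up for illustration.

```python
def round_trip_hours(distance_miles, speed_out_mph, speed_back_mph):
    # Each leg is a separate journey: compute each leg's time, then add the times.
    return distance_miles / speed_out_mph + distance_miles / speed_back_mph

distance = 120  # miles one way, the sample distance used above

scenario_1 = round_trip_hours(distance, 30, 40)  # 4 h out + 3 h back
scenario_2 = round_trip_hours(distance, 35, 35)  # assumption: 35 mph both ways

print(f"Scenario 1: {scenario_1:.2f} h")  # Scenario 1: 7.00 h
print(f"Scenario 2: {scenario_2:.2f} h")  # Scenario 2: 6.86 h
```

Under that assumption, scenario 1 comes out longer because she spends more time (4 hours) at the slower speed than at the faster one (3 hours).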

Ah okay! I shouldn’t have added the two speeds together. It’s two separate journeys.
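For any one-way distance, the same idea can be written out as a short derivation. The symbol $d$ (one-way distance in miles) is introduced here for illustration, and scenario 2 is again assumed to be 35 mph both ways:

$$
t_1 = \frac{d}{30} + \frac{d}{40} = \frac{7d}{120}, \qquad
t_2 = \frac{d}{35} + \frac{d}{35} = \frac{2d}{35} = \frac{6.857\ldots\,d}{120}
$$

$$
\text{average speed in scenario 1} = \frac{2d}{t_1} = \frac{240}{7} \approx 34.3 \text{ mph} < 35 \text{ mph}
$$

So $t_1 > t_2$ whatever value of $d$ is chosen, which is why picking a sample distance works as long as the times for the two legs are added, not the speeds.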