-

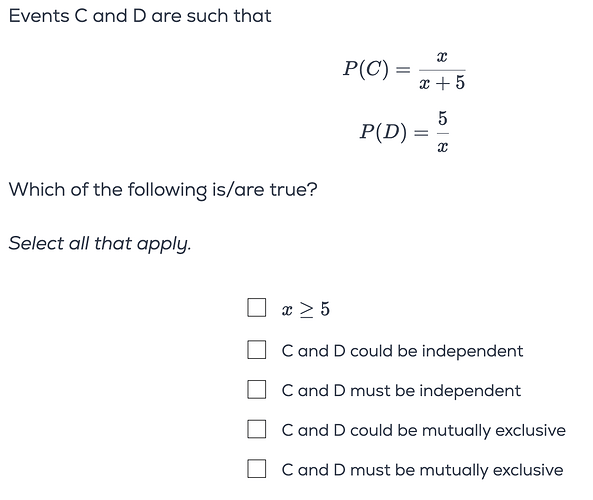

For the question above, my reasoning for eliminating the mutually exclusive case is this: if we set x = 5, then P(D) = 1, meaning D always occurs. At x = 5, P(C) = 1/2, so there is a 50% chance that C occurs. Since P(C) is not 0, C and D can occur together, so they cannot be mutually exclusive. Is my logic correct?

-

I am not sure how to work out the independence part. In the solution video, Greg mentioned that the probabilities of independent events could sum to more than 1. But the probabilities of dependent events can also sum to more than 1, so how can we select an answer based on this reason alone? For example, take two dependent events:

E1: getting a number less than 6 on a roll of a die, so P(E1) = 5/6

E2: getting an even number on the same roll, so P(E2) = 1/2

Their probabilities sum to 8/6, which is greater than 1.
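One way to double-check this dice example is to enumerate the six faces with exact fractions. This is a minimal sketch assuming a fair die; the helper `prob` and the event functions `e1`/`e2` are hypothetical illustrative names. It confirms the sum of 8/6 = 4/3 and that the events are dependent, since P(E1 ∩ E2) = 1/3 while P(E1)·P(E2) = 5/12.

```python
from fractions import Fraction

# Sample space: one roll of a fair six-sided die (assumption: fair die).
OUTCOMES = range(1, 7)

def prob(event):
    """Probability of an event (a predicate on outcomes) under a fair die."""
    hits = sum(1 for o in OUTCOMES if event(o))
    return Fraction(hits, 6)

def e1(o):
    return o < 6          # E1: number less than 6

def e2(o):
    return o % 2 == 0     # E2: even number

p1, p2 = prob(e1), prob(e2)
p_both = prob(lambda o: e1(o) and e2(o))  # outcomes 2 and 4

print(p1, p2, p1 + p2)                    # 5/6 1/2 4/3
print(p_both, p1 * p2)                    # 1/3 5/12
print("independent:", p_both == p1 * p2)  # independent: False
```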

So what is the criterion for deciding whether two events COULD or MUST be independent in questions like this?

-

This seems to be the right conclusion but the wrong reasoning? Why test only x = 5, and why does it matter that P(C) is not 0?

-

By the definition of independence, you have:

$$\mathbb{P}(C) \cdot \mathbb{P}(D) = \mathbb{P}(C \cap D)$$

We don't have an expression for $\mathbb{P}(C \cap D)$, so nothing rules out independence: those events could be independent. "Could" means it may or may not be true. "There could be an earthquake tomorrow" doesn't rule out the possibility of no earthquake tomorrow, right?

The same goes for C and D being dependent.
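To illustrate that a sum above 1 is compatible with independence as well as dependence, here is a minimal sketch assuming a single roll of a fair die, with two made-up events A = {1, 2, 3, 4} and B = {1, 2, 5} (hypothetical, not from the question). Their probabilities sum to 7/6, yet they satisfy the product rule above, unlike the dependent E1/E2 pair from the dice example earlier in the thread.

```python
from fractions import Fraction

# One roll of a fair six-sided die; events are sets of faces.
FACES = {1, 2, 3, 4, 5, 6}

def prob(event):
    """Probability of a set of faces under a fair die."""
    return Fraction(len(event & FACES), len(FACES))

def independent(a, b):
    """True if P(A and B) equals P(A) * P(B)."""
    return prob(a & b) == prob(a) * prob(b)

A = {1, 2, 3, 4}  # P(A) = 2/3 (hypothetical event)
B = {1, 2, 5}     # P(B) = 1/2 (hypothetical event)

print(prob(A) + prob(B))  # 7/6, greater than 1
print(independent(A, B))  # True: P(A and B) = P({1, 2}) = 1/3 = (2/3)(1/2)
```

So the sum exceeding 1 only tells you the events must overlap; whether that overlap matches the product P(A)·P(B) is a separate question.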

By the definition of mutual exclusivity, the occurrence of one event automatically rules out the occurrence of the other. At x = 5, there is a 100% chance that event D will occur (D is certain). If C and D were mutually exclusive, this would automatically rule out the occurrence of event C.

For example, E1: getting a 5 on a roll of a die.

E2: getting a 3 on the same roll.

Now if I say that the die is biased and that P(E1) = 1, then it must also be that P(E2) = 0. In a way, this is similar to Greg's logic that for mutually exclusive events the probabilities sum to at most 1. So if P(D) is 1, P(C) should be 0.
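The biased-die argument can be checked numerically. This is a minimal sketch assuming a hypothetical die that always lands on 5, with a fair die for comparison; the `weights` mapping and the `prob` helper are illustrative names, not from the solution. Because E1 and E2 share no faces, P(E1 or E2) = P(E1) + P(E2), and that sum can never exceed 1, so P(E1) = 1 forces P(E2) = 0.

```python
from fractions import Fraction

def prob(weights, event):
    """Probability of a set of faces, given a face -> probability mapping."""
    assert sum(weights.values()) == 1, "face probabilities must sum to 1"
    return sum(weights[f] for f in event)

E1 = {5}  # getting a 5
E2 = {3}  # getting a 3; no shared faces with E1, so mutually exclusive

# Hypothetical biased die that always lands on 5.
always_five = {f: Fraction(1 if f == 5 else 0) for f in range(1, 7)}
# A fair die, for comparison.
fair = {f: Fraction(1, 6) for f in range(1, 7)}

for name, die in [("always_five", always_five), ("fair", fair)]:
    p1, p2 = prob(die, E1), prob(die, E2)
    # Disjoint events: P(E1 or E2) = P(E1) + P(E2), and it is at most 1.
    assert prob(die, E1 | E2) == p1 + p2 <= 1
    print(name, p1, p2)

# always_five 1 0
# fair 1/6 1/6
```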

Mutual exclusivity has little to do with one of the events having probability 0. That is more of a special case, and not something you should default to when reasoning things out.

In general, two events C and D are called mutually exclusive when $\mathbb{P}(C \cap D) = 0$. That is not possible here because:

$\mathbb{P}(C) + \mathbb{P}(D)$ is already greater than 1, so there has to be an intersection; otherwise you'd have $\mathbb{P}(C \cup D) > 1$, which doesn't make sense.
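The step from "the sum exceeds 1" to "there has to be an intersection" is inclusion-exclusion. Written out, and plugging in the values quoted in the first post for x = 5 (P(D) = 1, P(C) = 1/2), it gives a lower bound on the overlap:

$$
\mathbb{P}(C \cup D) = \mathbb{P}(C) + \mathbb{P}(D) - \mathbb{P}(C \cap D) \le 1
\quad\Longrightarrow\quad
\mathbb{P}(C \cap D) \ge \mathbb{P}(C) + \mathbb{P}(D) - 1
$$

$$
\mathbb{P}(C \cap D) \ge \tfrac{1}{2} + 1 - 1 = \tfrac{1}{2} > 0
$$

Any x for which $\mathbb{P}(C) + \mathbb{P}(D) > 1$ gives the same conclusion, which is why a single such value is enough to rule out mutual exclusivity.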

Edit: A "probability" greater than 1 can make sense depending on how you normalize your probability space, but hopefully we are both aware that we're working with the standard probability measure (probabilities range from 0 to 1 inclusive). Since we're here: the crucial property is that the measure has to be finite, and rescaling it doesn't change that, so having probabilities between 0 and 1 is just a convention.